Documentation

System Overview[edit | edit source]

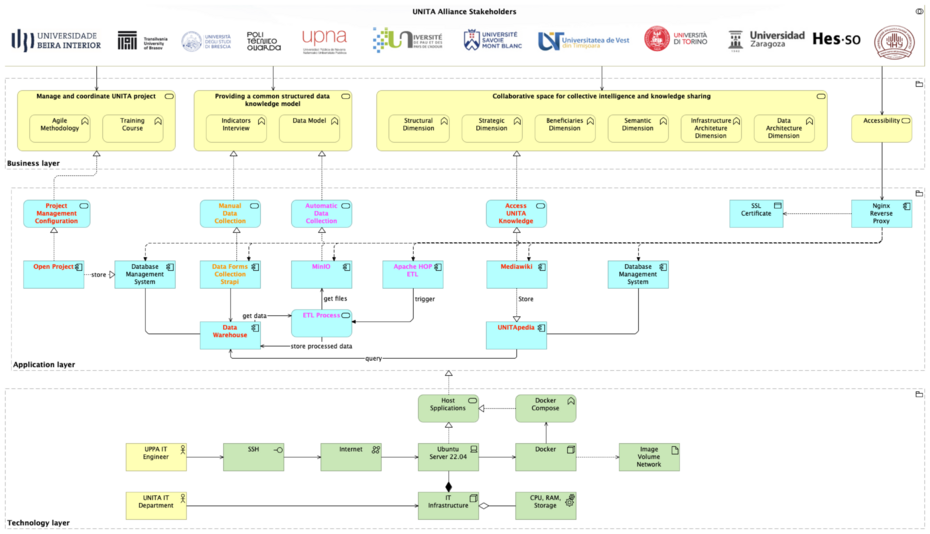

The UNITApedia system is composed of two integrated main components designed to enhance data accessibility, transparency, and collaboration among UNITA members. It connects a shared data warehouse with a MediaWiki-based front-end, creating a dynamic and scalable ecosystem for data visualization, management, and analysis.

[edit | edit source]

Acts as the central repository for structured data such as deliverables, indicators, and progress metrics. Utilizes metadata, ontology, and semantic web technologies to provide a comprehensive, interconnected view of data collected across all UNITA members. Supports efficient data centralization, organization, and analysis, ensuring a unified understanding of the data ecosystem. Backed by PostgreSQL, enabling complex queries, scalability, and robust data storage. Alongside Apache HOP as an ETL to develop powerful data pipelines.

MediaWiki-Based Front-End Interface[edit | edit source]

Provides a user-friendly system for monitoring project progress, visualizing metrics, and assessing impact. Acts as the primary user interface, powered by extensions like External Data, Scribunto, and Semantic MediaWiki. Dynamically retrieves data through its API layer, integrating seamlessly with the data warehouse. Enhances decision-making and collaboration by providing stakeholders with real-time, actionable insights. Share and collaborate with other users to extend the UNITA knowledge-base.

Key Features[edit | edit source]

- Near real-time integrated data pipeline processus:

- Utilizes robust APIs to fetch and display updated information from the PostgreSQL database.

- Near-instantaneous process from data extraction to final result display on UNITApedia.

- User-Friendly Interface:

- Built on MediaWiki, ensuring an intuitive experience for users of varying technical backgrounds.

- Extensions like Page Forms and Semantic MediaWiki simplify data input, annotation, and querying.

- Open Source:

- Designed with modularity and scalability in mind, allowing deployment across other UNITA members or similar institutions.

- Supports customization to meet unique institutional needs while adhering to UNITA’s vision.

- Dynamic Queries:

- Uses optimized prepared PostgreSQL statements and Lua scripting via MediaWiki extensions to deliver efficient and dynamic data visualization.

- Allows advanced customization of data presentation formats based on user needs.

- Scalable Architecture:

- Employs a Dockerized infrastructure for each subsystem (MediaWiki, Strapi, PostgreSQL, Apache HOP, etc.), ensuring modularity and scalability.

- Supports efficient deployment, updates, and resource allocation.

- Enhanced Collaboration and Transparency:

- Enables cross-institutional collaboration by centralizing data in the shared warehouse.

- Provides stakeholders with real-time visualizations, ensuring informed decision-making and alignment with organizational goals.

System Architecture[edit | edit source]

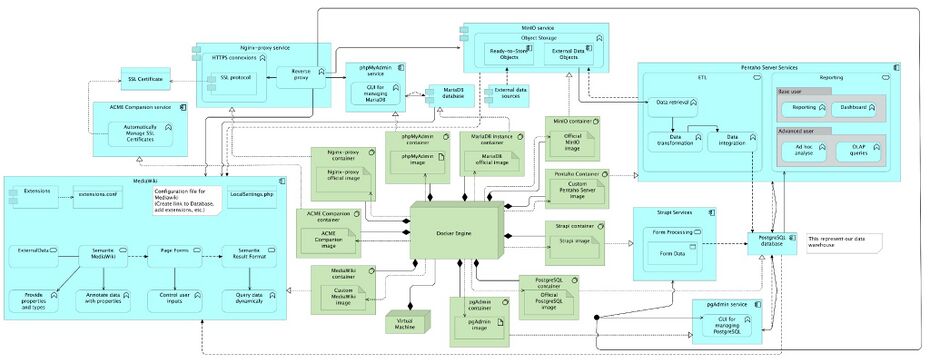

This chapter provides an overview of the UNITApedia system architecture, highlighting the containerized design, data flows, and interactions between the various services. The architecture ensures scalability, maintainability, and security, while leveraging open-source technologies to facilitate collaboration and data accessibility across the UNITA alliance.

The following considerations shaped the UNITApedia architecture:

- Modularity & Scalability

- Docker ensures each service is isolated, easily updated, and can be scaled independently if usage grows.

- Clear separation of roles (Strapi for input, Apache HOP for ETL, MediaWiki for output) streamlines development and maintenance.

- Open-Source & Extensibility

- MediaWiki: Chosen for its mature ecosystem (extensions like Semantic MediaWiki, External Data, Page Forms) and robust community support.

- PostgreSQL: Offers advanced query capabilities, reliability, and easy integration with Apache HOP.

- MinIO: An open-source, S3-compatible object store that fits seamlessly into containerized deployments.

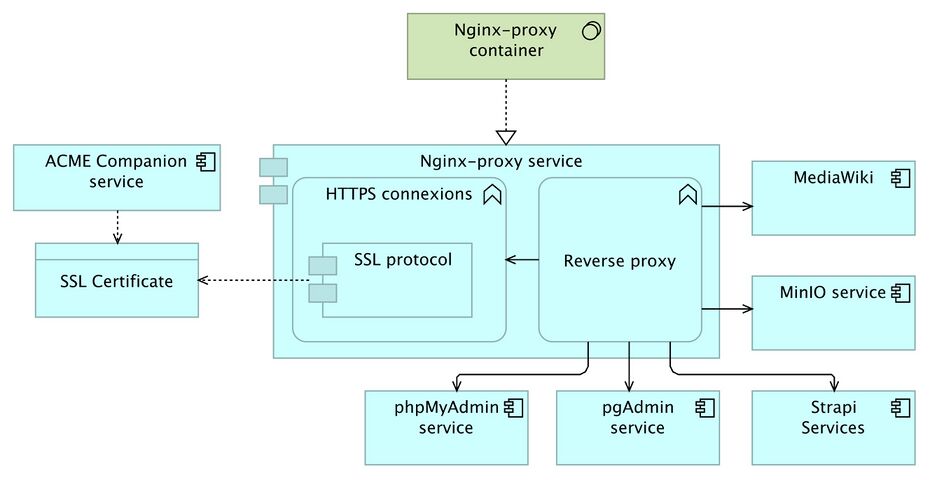

- Security & SSL

- Nginx-Proxy + ACME Companion: Provides automated certificate management and secure HTTPS connections, protecting data in transit.

- Role-Based Access: Strapi enforces form-level permissions, while MediaWiki can be configured with namespace-based access for sensitive data.

- Data Consistency & Quality

- Apache HOP ETL: Ensures data from different sources (Strapi, MinIO CSVs) is validated, cleaned, and structured before landing in the datamart.

- Semantic MediaWiki:Semantic MediaWiki allows for structured data definitions and cross-referencing, ensuring consistent reporting across tasks and indicators.

- Maintainability & Future Growth

- Each service can be updated or replaced with minimal impact on the others, thanks to Docker’s container-based isolation.

- The architecture can accommodate new data sources, additional tasks/indicators, or new alliances with minimal refactoring.

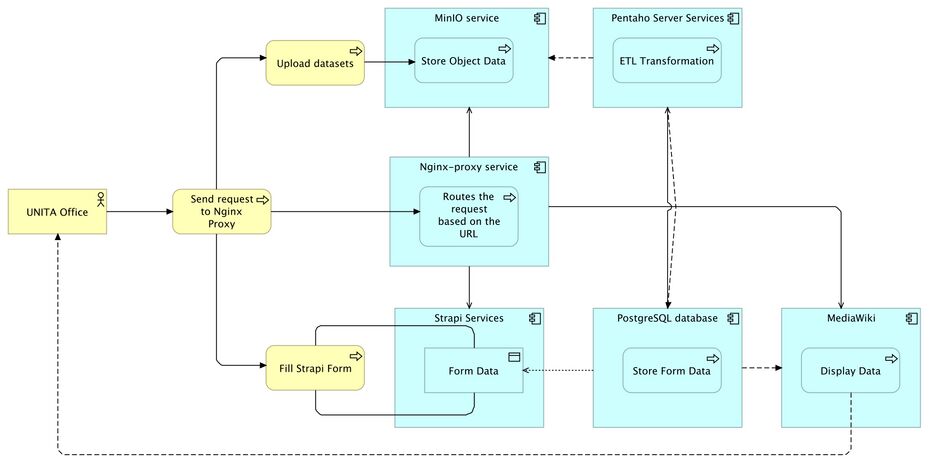

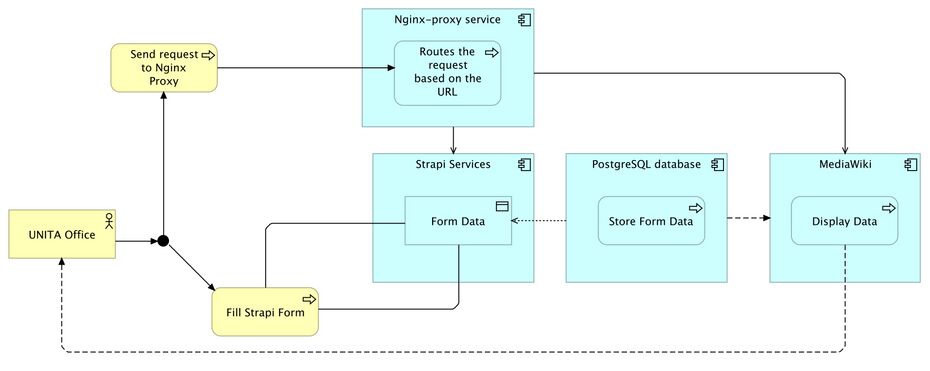

Request Flow[edit | edit source]

- User Interaction: A UNITA Office user or a Task Leader navigates to the UNITApedia URL.

- Nginx-Proxy: Receives the request over HTTPS and routes it to the appropriate container (MediaWiki, Strapi, etc.).

- Data Entry (Strapi): If the user is adding new indicator data, the form submission is stored in the Strapi database.

- ETL (Apache HOP): On a scheduled or on-demand basis, Apache HOP retrieves the new entries from Strapi (or CSV files in MinIO), applies transformations, and loads them into the datamart.

- MediaWiki Display: MediaWiki queries the datamart schema via the External Data extension to display up-to-date metrics on wiki pages or dashboards.

- Administration: pgAdmin is used by database administrators for maintenance tasks, accessible behind the Nginx-Proxy with proper credentials.

High-Level Overview[edit | edit source]

UNITApedia is composed of several interconnected services running in Docker containers, orchestrated via Docker Compose. The main components are:

Nginx-Proxy Service[edit | edit source]

- Role: Acts as a reverse proxy, routing external HTTP/HTTPS requests to the appropriate backend service based on URL paths.

- Security: Integrates with the ACME Companion service for automatic SSL certificate management and renewal, ensuring secure connections via HTTPS.

- Endpoints: Forwards traffic to MediaWiki, Strapi, phpMyAdmin, pgAdmin, MinIO, Apache HOP, and any additional admin interfaces.

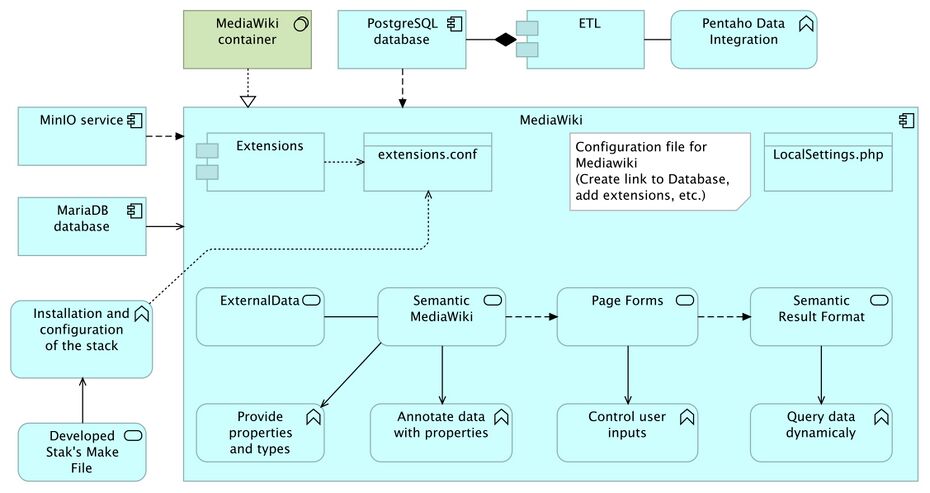

MediaWiki Container[edit | edit source]

- Primary Role: Serves as the user-facing front-end, allowing UNITA stakeholders to view, edit, and query data related to alliance activities and indicators.

- Extensions:

- External Data – Dynamically queries the PostgreSQL data warehouse (datamart) to display indicators, metrics, and other information.

- Page Forms / Semantic MediaWiki – Enables structured data input and advanced semantic queries within the wiki.

- Scribunto (Lua) – Allows for more advanced scripting and data manipulation.

- PluggableAuth + OpenID Connect – Integrates SSO (e.g., Keycloak) for institutional login.

- Configuration: Managed via LocalSettings.php, which includes namespace definitions (e.g., DataSrc and Doc) and data source connections (prepared SQL statements).

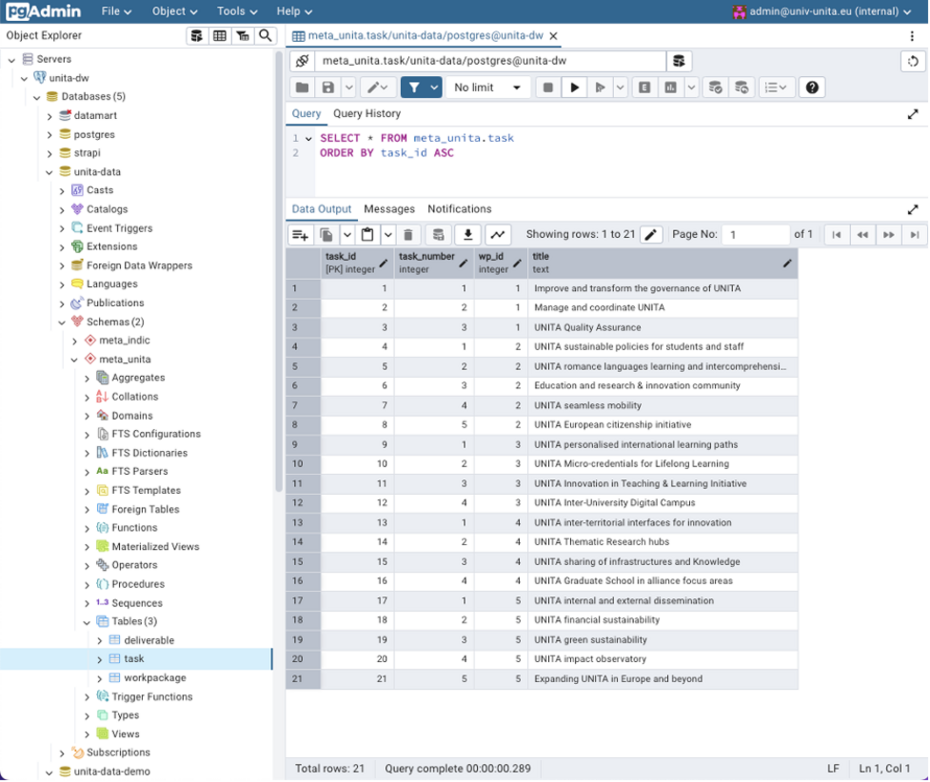

PostgreSQL (Data Warehouse)[edit | edit source]

- Role: Central repository storing structured data such as deliverables, indicators, and metrics.

- Multi-Database Setup:

- strapi: Contains raw input tables from Strapi forms.

- datamart: Holds transformed and processed data ready for MediaWiki queries.

- unita-data: Contain additional metadata or wiki configuration tables.

- Administration: Managed via pgAdmin for database operations (e.g., backups, user management).

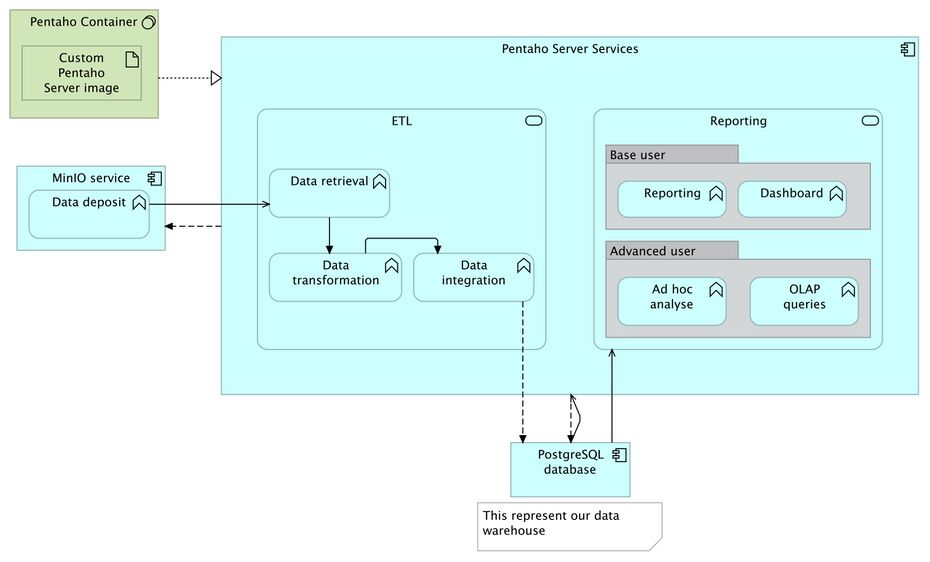

Apache HOP (ETL and Reporting)[edit | edit source]

- Processes:

- Data Retrieval: Fetches raw datasets from MinIO buckets (CSV files) or Strapi tables in PostgreSQL.

- Data Transformation: Cleans and normalizes data, ensuring consistency (e.g., date formatting, numeric checks, selecting values).

- Data Integration: Loads validated data into the datamart schema for consumption by MediaWiki.

- Scheduling & Monitoring: Deployed Apache HOP “Carte Server” allows scheduling of jobs and transformations, with logs for error handling and performance monitoring.

MinIO (Object Storage)[edit | edit source]

- Role: Stores raw data files (CSV, PDFs, images, etc.) uploaded by UNITA Offices.

- Integration: Apache HOP connects to MinIO using an S3-compatible interface, retrieving files for ETL processing.

- Organization: Multiple buckets can be created (e.g., “dev” for storing Apache HOP transformations, indicators buckets to store CSV files coming from UNITA Offices).

Strapi (Middleware / Headless CMS)[edit | edit source]

- Purpose: Provides a user-friendly interface for UNITA Offices to manually input or update indicator data.

- Data Flow: Stores raw records in its own PostgreSQLL database schema, which Apache HOP then reads, transforms, and pushes into datamart.

- APIs: Exposes REST or GraphQL endpoints if needed for external integrations or advanced use cases.

Administrative Interfaces[edit | edit source]

- phpMyAdmin: Web-based administration tool for MariaDB (if used, e.g., for certain MediaWiki tables or other services).

- pgAdmin: Used to manage PostgreSQL databases, including creation of new schemas, user roles, and backups.

Docker-Based Infrastructure[edit | edit source]

- Containerization: Each service (MediaWiki, Strapi, Apache HOP, PostgreSQL, MinIO, Nginx) runs in its own container, simplifying updates and scaling.

- Networking: Docker Compose defines an internal network allowing containers to communicate securely without exposing internal ports directly to the public internet.

- Environment Variables: Credentials and configuration details (e.g., database passwords, S3 access keys) are injected at runtime to keep them out of version control.

LocalSettings Configuration (MediaWiki)[edit | edit source]

The LocalSettings.php file is the backbone of the UNITApedia Impact Observatory’s MediaWiki installation. It drives everything from site identity to extensions, external data sources, caching, security and beyond. Below is an overview of how your current configuration supports the site’s functionality.

Basic Site Configuration[edit | edit source]

Site Identity & URLs[edit | edit source]

$wgSitename: Set via theOBSERVATORY_NAME

environment variable.$wgServer&$wgCanonicalServer: Usehttps://+DOMAIN_NAMEfrom env.$wgScriptPath: Set to"", so all URLs are relative to the webroot.$wgResourceBasePath: Mirrors$wgScriptPathfor static assets.

Locales & Protocols[edit | edit source]

- Default language is English (

$wgLanguageCode = "en"). - Shell locale forced to

C.UTF-8for consistent sorting/formatting. - Raw HTML is enabled (

$wgRawHtml = true) and an extra allowed protocol was added (https://elearn.univ-pau.fr/).

User Preferences & Authentication[edit | edit source]

Email & Notifications[edit | edit source]

- Email is fully enabled (

$wgEnableEmail,$wgEnableUserEmail,$wgEmailAuthentication). - Sender address and emergency contact are pulled from env (

MEDIAWIKI_PWD_EMAIL,MEDIAWIKI_CONTACT_EMAIL).

Login Options[edit | edit source]

- Local login via PluggableAuth is enabled (

$wgPluggableAuth_EnableLocalLogin = true). - Keycloak/OpenID Connect example remains commented out for future SSO.

Database Settings[edit | edit source]

Primary Database (MySQL/MariaDB)[edit | edit source]

- Type:

mysqlon hostmariadb. - Credentials and database name injected from

MEDIAWIKI_DB_

env vars. - Table options: InnoDB with binary charset.

Caching, File Uploads & Image Handling[edit | edit source]

Uploads & Commons[edit | edit source]

- File uploads are enabled (

$wgEnableUploads = true). - InstantCommons integration is turned on (

$wgUseInstantCommons = true). - ImageMagick is used for conversions (

$wgUseImageMagick = true, convert command at/usr/bin/convert).

File Types & Security[edit | edit source]

- A broad list of extensions is allowed: png, gif, jpg, doc, xls, pdf, pptx, svg, etc.

- A MIME-type blacklist protects against script uploads (e.g. PHP, shell scripts, MS executables).

Localization & Time Zone[edit | edit source]

- Wiki text in English; PHP shell locale

C.UTF-8. Time Zone

:$wgLocaltimezoneset toUTC, anddate_default_timezone_set('UTC')for consistency.

Security & HTTPS[edit | edit source]

Secret & Upgrade Keys

:$wgSecretKeyand$wgUpgradeKeyloaded from env vars.HTTPS Enforcement

: All traffic is forced over HTTPS ($wgForceHTTPS = true).

Skins, Permissions & User Groups[edit | edit source]

Skinning[edit | edit source]

- Default skin is Vector-2022 (

$wgDefaultSkin = 'vector-2022'), with older Vector-2011 disabled. - All users are locked onto Vector-2022 (

$wgVectorDefaultSkinVersion = '2',$wgVectorShowSkinPreferences = false).

User Rights[edit | edit source]

- Anonymous (

*

) users can read and edit pages but cannot create accounts. - Registered

user

role loses self-edit rights (CSS/JS/JSON). sysop

and custom roles (e.g. translator, recipes) have fine-grained SMW and Page Forms permissions.

Enabled Extensions[edit | edit source]

A streamlined but powerful set of extensions is loaded via wfLoadExtension():

- Arrays

- Babel

- CategoryTree

- Cargo

- Cite

- CleanChanges

- CodeEditor/CodeMirror

- ConfirmEdit

- DataTransfer

- External Data

- ECharts

- FlexDiagrams

- Gadgets

- HeaderTabs

- IframePage

- ImageMap

- InputBox

- Interwiki

- MagicNoCache

- Maps

- Math

- ModernTimeline

- MultimediaViewer

- Network

- Nuke

- Page Forms

- PageImages

- PageSchemas

- ParserFunctions

- PdfHandler

- PluggableAuth

- Poem

- Renameuser

- ReplaceText

- SecureLinkFixer

- Scribunto

- SpamBlacklist

- SyntaxHighlight_GeSHi

- TabberNeue

- TemplateData

- TemplateWizard

- TextExtracts

- TitleBlacklist

- Translate

- TreeAndMenu

- UniversalLanguageSelector

- Variables

- VisualEditor

- Widgets

- WikiEditor

Mapping & Charts[edit | edit source]

- ECharts for rich, JS-driven charts.

- OpenStreetMap for coordinate-based maps.

Semantic MediaWiki Stack[edit | edit source]

wfLoadExtension('SemanticMediaWiki')andenableSemantics(getenv('DOMAIN_NAME')).- SMW add-ons: SemanticResultFormats, SemanticCompoundQueries, SemanticFormsSelect.

- Semantic links enabled in the

DATASRC

namespace.

External Data Sources & Query Files[edit | edit source]

Local file source DDD

pointing at/home/hub/data/files/dev/.- PostgreSQL source ID for live lookups.

- GET-allowance turned on (

$wgExternalDataAllowGetters = true). - Custom query includes:

query_meta_unita.php,query_meta_indicators.php,query_raw.php,query_count.php,query_DEMO_DEC24.php,query_DEMO_JAN25.php, and anindicators.phpaggregator.

Custom Namespaces[edit | edit source]

Doc

(800/801) andDataSrc

(810/811) namespaces defined for structured separation of docs vs. ingested data.- A

Recipes

(805/806) namespace for specialized content.

Mail & Logging[edit | edit source]

SMTP

: Local Postfix onlocalhost:25, no auth, unencrypted.- Mail debug logs written to

/tmp/mediawiki-mail.log.

Debugging & Development[edit | edit source]

Error Display

: All exception details, backtraces, SQL errors, and development warnings are enabled ($wgShowExceptionDetails = true,$wgShowDebug = true, etc.) for rapid troubleshooting.

Docker-Compose File Configuration[edit | edit source]

The UNITApedia system is deployed using Docker Compose (version 3), which orchestrates all the services required for the application. This configuration ensures modularity, scalability, and clear separation between components. Below is the updated Docker Compose configuration.

Version and Networks[edit | edit source]

Version

: Compose file uses version3

.Networks

: A custom networkobservatory_netdefined with thebridge

driver.

networks:

observatory_net:

driver: bridge

Volumes[edit | edit source]

Persistent storage is defined through several named volumes:

volumes: mariadb: dw-data: pgadmin-volume: html: certs: acme: minio:

Services[edit | edit source]

Each service is defined with specific images, settings, and dependencies.

mariadb:

image: mariadb:10.11

container_name: mariadb

restart: always

networks:

- observatory_net

expose:

- "3306"

volumes:

- mariadb:/var/lib/mysql

- ./services/mariadb/_initdb.mariadb/:/docker-entrypoint-initdb.d/

env_file:

- .env

- ./services/mariadb/.env

postgres:

image: postgres:14.0-alpine

container_name: postgres

restart: unless-stopped

networks:

- observatory_net

expose:

- "5432"

ports:

- "5432"

volumes:

- dw-data:/var/lib/postgresql/data/

- ./services/strapi/strapi.dump:/tmp/strapi.dump

- ./services/postgres/_initdb.pg/:/docker-entrypoint-initdb.d/

env_file:

- .env

- ./services/postgres/.env

mediawiki:

build:

context: ./services/mediawiki

dockerfile: MediaWiki.Dockerfile

container_name: mediawiki

restart: always

networks:

- observatory_net

expose:

- "80"

volumes:

- ./services/mediawiki/LocalSettings.php:/var/www/html/LocalSettings.php:ro

- ./services/mediawiki/composer.local.json:/var/www/html/composer.local.json

- ./services/mediawiki/images/:/var/www/html/images/:rw

- ./services/mediawiki/resources/assets/:/var/www/html/resources/assets/

- ./services/mediawiki/extensions:/var/www/html/extensions/

- ./services/mediawiki/mediawiki/:/var/www/html/mediawiki/:ro

env_file:

- .env

- ./services/mediawiki/.env

environment:

VIRTUAL_HOST: ${DOMAIN_NAME}

VIRTUAL_PATH: /

VIRTUAL_PORT: "80"

LETSENCRYPT_HOST: ${DOMAIN_NAME}

LETSENCRYPT_EMAIL: ${LETSENCRYPT_EMAIL}

depends_on:

- mariadb

- postgres

strapi:

build:

context: ./services/strapi

dockerfile: Strapi.Dockerfile

image: strapi/strapi:latest

container_name: strapi

restart: unless-stopped

networks:

- observatory_net

expose:

- "1337"

volumes:

- ./services/strapi/config:/opt/app/config

- ./services/strapi/src:/opt/app/src

- ./services/strapi/package.json:/opt/package.json

- ./services/strapi/yarn.lock:/opt/yarn.lock

- ./services/strapi/.env:/opt/app/.env

- ./services/strapi/public/uploads:/opt/app/public/uploads

env_file:

- /data/impact-observatory/services/strapi/.env

environment:

VIRTUAL_HOST: ${DOMAIN_NAME}

VIRTUAL_PATH: /strapi/

VIRTUAL_DEST: /

VIRTUAL_PORT: "1337"

LETSENCRYPT_HOST: ${DOMAIN_NAME}

LETSENCRYPT_EMAIL: ${LETSENCRYPT_EMAIL}

depends_on:

- postgres

command: /bin/sh -c "yarn strapi ts:generate-types && yarn develop"

hop-web:

image: apache/hop-web:latest

container_name: hop-web

restart: unless-stopped

ports:

- "8080"

volumes:

- ./services/hop-web/projects:/project

- ./services/hop-web/tomcat/config:/config

env_file:

- .env

environment:

VIRTUAL_HOST: ${DOMAIN_NAME}

VIRTUAL_PATH: /hop/

VIRTUAL_DEST: /

VIRTUAL_PORT: "8080"

LETSENCRYPT_HOST: ${DOMAIN_NAME}

LETSENCRYPT_EMAIL: ${LETSENCRYPT_EMAIL}

networks:

- observatory_net

depends_on:

- postgres

minio:

image: minio/minio:latest

container_name: minio

restart: always

networks:

- observatory_net

ports:

- "9000"

- "9001"

volumes:

- ./services/minio/data:/data

env_file:

- .env

- ./services/minio/.env

environment:

VIRTUAL_HOST: ${DOMAIN_NAME}

VIRTUAL_PATH: /minio/

VIRTUAL_DEST: /

VIRTUAL_PORT: "9001"

MINIO_BROWSER_REDIRECT_URL: https://unitapedia.univ-unita.eu/minio

command: server /data --console-address ":9001"

phpmyadmin,pgadmin,nginx-proxy, andacme-companionfollow similar patterns for image, ports, volumes, networks, and virtual-host environment variables.

Makefile Configuration[edit | edit source]

The Makefile is designed to automate common tasks involved in managing the UNITApedia deployment. By reading settings from an .env file and an extensions.config file, it enables consistent builds, extension installation, maintenance operations, and backups.

Environment and Extension Configuration[edit | edit source]

Environment Inclusion

:

TheMakefilebegins by including the.envfile and./services/mediawiki/.env, so that environment-specific variables (e.g., database credentials, domain names) are available throughout the build process.

Extensions Configuration

:

Theextensions.configfile lists extension names and versions. Although the cloning logic is commented out, the Makefile can use tools likeawkto extractEXTENSION_NAMESandEXTENSION_VERSIONSfor automated fetching of MediaWiki extensions.

Core Targets[edit | edit source]

help: Show available commands and descriptions.up: Start all containers ($(DC) up -d).down: Stop all containers ($(DC) down).restart: Restart all containers.logs: Follow container logs with the last 100 lines.clean: Remove local build & backup artifacts, reset extensions directory, and prune unused Docker objects.build/build-no-ext: Build full stack and initialize MediaWiki extensions and services.mw-install: Install Composer dependencies and runmaintenance/update.phpinside the MediaWiki container.mw-initialize: First-time Composer update (no scripts) and post-install scripts, then runmaintenance/update.php.mw-dev-copy: Copy the MediaWiki container’s/var/www/htmlcontents into a local folder for development.backup: Run bothbackup-mariadbandbackup-postgres.backup-mariadb: Dump the MariaDB database into a timestamped file underservices/mariadb/backups.backup-postgres: Dump the PostgreSQL database into a timestamped file underservices/postgres/backups.

# Load environment variables

include .env

include ./services/mediawiki/.env

DC = docker-compose

DEFAULT_EXT_VERSION = REL1_39

.DEFAULT_GOAL := help

help: ## Show help

@echo "Available commands:"

@grep -E '^[a-zA-Z_-]+:.*?## .*$$' $(MAKEFILE_LIST) | sort | awk 'BEGIN {FS = ":.*?## "}; {printf " \033[36m%-20s\033[0m %s\n", $$1, $$2}'

# ───── Docker Lifecycle ─────

up: ## Start all containers

$(DC) up -d

down: ## Stop all containers

$(DC) down

restart: ## Restart all containers

$(DC) down && $(DC) up -d

logs: ## Show container logs

$(DC) logs -f --tail=100

clean: ## Remove local build & backup artifacts

rm -rf mediawiki_container_folder services/**/backups

rm -rf services/mediawiki/extensions && mkdir -p services/mediawiki/extensions

docker system prune -a --force

build: mw-extensions ## Build full stack, install MediaWiki extensions, and start everything

$(DC) build

make mw-initialize

build-no-ext: ## Build full stack, install MediaWiki extensions, and start everything

$(DC) build

make mw-initialize

# ───── MediaWiki ─────

mw-install: ## Install composer and update mediawiki (running maintenance script)

$(DC) up -d

sleep 10

$(DC) exec mediawiki composer install

$(DC) exec mediawiki php maintenance/update.php

mw-initialize: ## First-time Composer install with no scripts (safe for fresh builds)

$(DC) up -d

sleep 10

@echo "📦 Composer update (no-scripts)..."

$(DC) exec mediawiki composer update --no-dev --prefer-dist --optimize-autoloader --no-scripts

@echo "⚙️ Running post-install/update scripts manually (if any)..."

$(DC) exec mediawiki composer run-script post-update-cmd || true

@echo "🧹 Running MediaWiki update.php..."

$(DC) exec mediawiki php maintenance/update.php

mw-dev-copy: ## Copy MediaWiki container contents locally (for dev)

rm -rf mediawiki_container_folder

$(DC) cp mediawiki:/var/www/html/. mediawiki_container_folder/

# ───── Database Backups ─────

backup: ## Backup all databases

make backup-mariadb

make backup-postgres

backup-mariadb:

mkdir -p services/mariadb/backups

$(DC) exec database sh -c "mysqldump -u$$MARIADB_USER -p$$MARIADB_PWD $$MEDIAWIKI_DB_NAME" > services/mariadb/backups/backup_$(shell date +%F_%T).sql

backup-postgres:

mkdir -p services/postgres/backups

$(DC) exec datawarehouse pg_dump -U $$DATABASE_USERNAME $$DATABASE_NAME > services/postgres/backups/backup_$(shell date +%F_%T).sql

Data Architecture[edit | edit source]

This chapter outlines the data architecture of UNITApedia, which is pivotal for ensuring that data is consistent, reliable, and readily accessible for the Impact Observatory. It explains how the design supports accurate and timely monitoring and evaluation of the university alliance's impact. Covering the complete data lifecycle—from data ingestion and modeling to processing, storage, and access. It details the systems and processes involved in managing data flow, establishes governance and security measures, and discusses strategies for scalability and performance optimization.

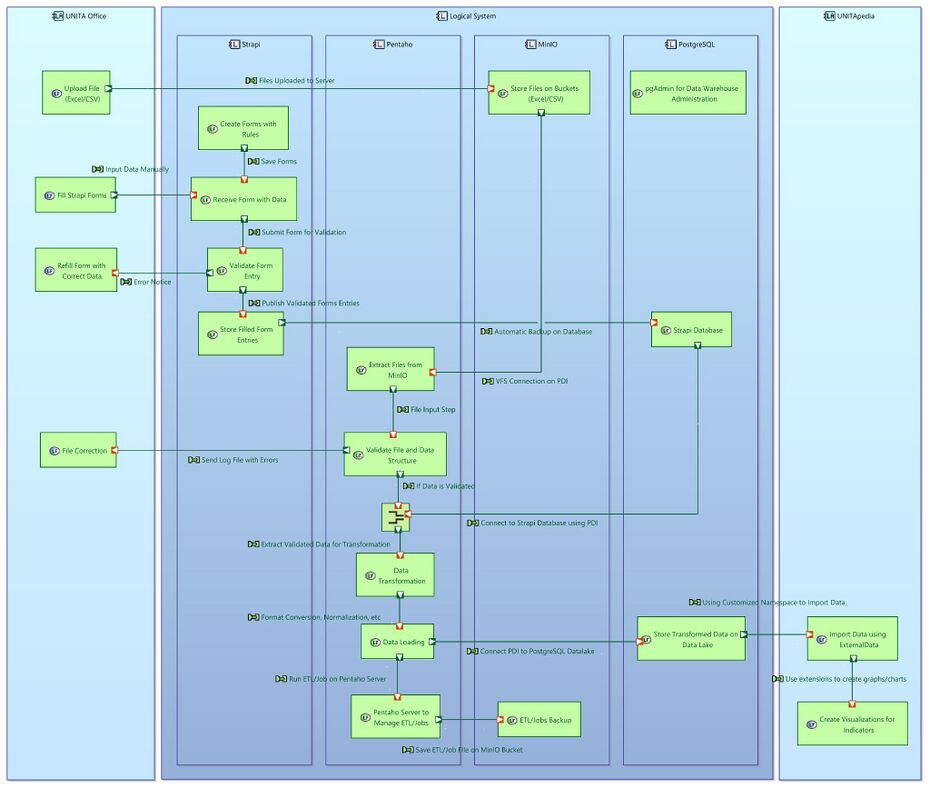

Data Sources and Ingestion[edit | edit source]

Data Sources[edit | edit source]

- Manually

- Strapi Input Forms: Certain UNITA activities (e.g., task-based deliverables) require manual data entry. Authorized users in each UNITA office submit relevant metrics and progress updates through Strapi forms.

- Semi-Automatically

- MinIO Uploads (CSV): UNITA offices may upload internal data from local databases (e.g., student mobility records, research outputs, events) into MinIO buckets for automatic ingestion into the Datawarehouse.

- Publicly Available Data Sets: Data from external APIs (e.g., Moodle, Erasmus+ portals) or open data repositories may be incorporated for broader impact analysis or benchmarking.

Data Ingestion Methods[edit | edit source]

ETL Pipeline (Semi-automatic – MinIO)

- Apache HOP Integration: Apache HOP serves as the primary Extract-Transform-Load (ETL) tool. With designed jobs it periodically checks all the configured MinIO buckets for each indicator where CSV or other structured files are uploaded by UNITA partners.

- Data Transformation: Once Apache HOP detects new files in MinIO, it cleanses and transforms the data according to predefined mappings and rules (e.g., converting date formats, normalizing institution names).

- Loading into Data Warehouse: After validation, the transformed data is loaded into PostgreSQL, ensuring consistent schemas and reliable storage. Any errors or exceptions (e.g., missing columns, incorrect data types) are logged and reported back to the relevant partners.

ETL Pipeline (Manual – Strapi forms)

- User Submission: For data that cannot be automatically generated, UNITA offices fill out Strapi forms for each indicator.

- Validation and Approval: Basic validation rules (e.g., mandatory fields, numeric ranges) are applied at form submission. Where needed, designated coordinators such as Task Leaders or Project Managers can review and approve entries before they are transferred to Apache HOP for transformation and integration.

- Data Transformation: Once Apache HOP detects new entries in the Strapi database on PostgreSQL, it cleanses and transforms the data according to predefined mappings and rules (e.g., converting date formats, normalizing institution names).

- Loading into Data Warehouse: After validation, the transformed data is loaded into the PostgreSQL Datawarehouse, ensuring consistent schemas and reliable storage. Any errors or exceptions (e.g., missing columns, incorrect data types) are logged and reported back to the relevant partners.

Batch vs. Near real-time Ingestion

- Batch Frequency: In most cases, ingestion jobs run on a scheduled basis—daily or weekly—depending on data volume and the nature of the indicators. For example, monthly metrics on student mobility may only require a weekly refresh.

- On-Demand Updates: When critical data (e.g., newly completed deliverables, urgent progress metrics) must be reflected quickly in dashboards, users can trigger an on-demand ETL job via the Apache HOP server.

Data Modeling and Storage[edit | edit source]

Conceptual Data Model[edit | edit source]

Task[edit | edit source]

- Represents a high-level work package or sub-project (e.g., T1.2, T1.3, T2.3).

- Each Task can have multiple Indicators associated with it.

Indicator[edit | edit source]

- A specific metric or measure used to track progress and impact (e.g.,

Number of UNITA offices established

,Percentage of deliverables submitted on time

). - Attributes include:

- ID: Unique identifier.

- Task Reference: Links the Indicator to a specific Task (e.g., T1.2).

- Description: Brief text describing what the Indicator measures.

- Unit of Measurement: Defines whether the Indicator is a percentage, numeric count, or other format.

- Source of Data: Describes where the raw data originates (e.g.,

EC Platform

,UNITA Offices

,Strapi forms

,MinIO CSVs

). - Frequency: How often the data is expected/required (e.g., annual, monthly, after each event).

- Baseline: The initial value at the start of the project (e.g., Baseline 2022).

- Target: The goal to be reached by a certain date (e.g., Target 2027).

- Parent Indicator (optional): References another Indicator if there is a hierarchical or “roll-up” relationship (e.g., sub-indicators summing to a main indicator).

Example Data Flow[edit | edit source]

Strapi or MinIO → Data is collected for each Indicator (e.g., number of participants at an event).Apache HOP → Cleans/transforms the data and inserts/updates rows in datamart.indicatorMediaWiki → Uses the External Data extension to query datamart tables (e.g., t126, t125) and store or display them in wiki pages.

Physical Data Model (PostgreSQL)[edit | edit source]

- Database Organization

- Strapi Database

- Stores form entries and raw indicator data before processing.

- Table examples: t121s, t122s, etc.

- unita-data Database

- Holds metadata used by MediaWiki.

- Includes tables or configurations for the wiki’s semantic extensions.

- datamart Database

- Core location for transformed and aggregated data coming from Apache HOP.

- Example tables: public.t126, public.t127, etc. (each corresponding to a particular indicator set).

- Strapi Database

Data Access and Consumption[edit | edit source]

MediaWiki for Data Retrieval[edit | edit source]

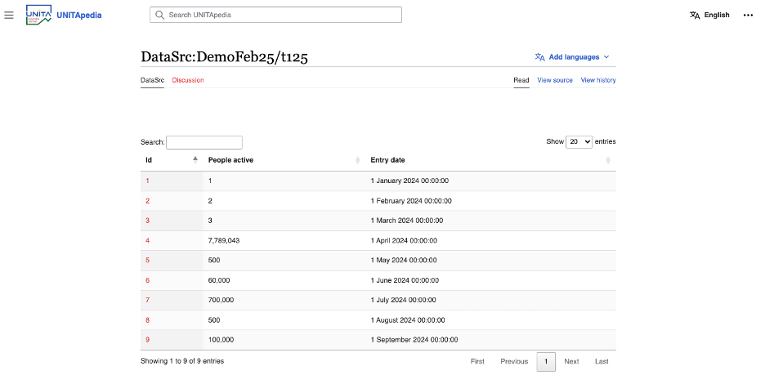

MediaWiki serves as the primary front-end for displaying and interacting with UNITApedia data. Its External Data extension allows the wiki to query the PostgreSQL datamart directly, retrieving the latest processed data (e.g., indicators, event counts, user engagement metrics).

External Data Extension[edit | edit source]

$wgExternalDataSources['DW_UNITAData_DEMOJAN25_T125'] = [

'server' => 'datawarehouse',

'type' => 'postgres',

'name' => 'datamart',

'user' => getenv('DATABASE_USERNAME'),

'password' => getenv('DATABASE_PASSWORD'),

'prepared' => <<<'POSTGRE'

SELECT * FROM public.t125 ORDER BY id ASC;

POSTGRE

];

- Defines a named data source (

DW_UNITAData_DEMOJAN25_T125) pointing to the datamart database. - Uses prepared SQL statements for efficient and secure queries.

- Data retrieval is performed via parser functions like

#get_db_datato pull records into wiki pages.

Custom Namespaces[edit | edit source]

DataSrc(ID 810) is used for storing and managing data imports.Doc(ID 800) holds documentation or supplementary content.- These namespaces help organize data separately from content pages, and control access/edit permissions as needed.

Example Page for Data Import[edit | edit source]

{{#get_db_data:

db=DW_UNITAData_DEMOJAN25_T125

|data=id=id,People_active=people_active,entry_date=date

|cache seconds=0

}}

{{#store_external_table: DW_UNITAData_DEMOJAN25_T125

|id={{{id}}}

|People_active={{{People_active}}}

|entry_date={{{entry_date}}}

}}

{{#ask: [[-Has subobject::{{FULLPAGENAME}}]]

|?id

|?People_active

|?entry_date

|class=datatable

|format=datatables

|mainlabel=-

|header=show

}}

#get_db_datafetches rows fromdatamart.public.t125.#store_external_tablewrites these rows into Semantic MediaWiki subobjects, enabling semantic queries.#askdisplays the results in a dynamic DataTables format.

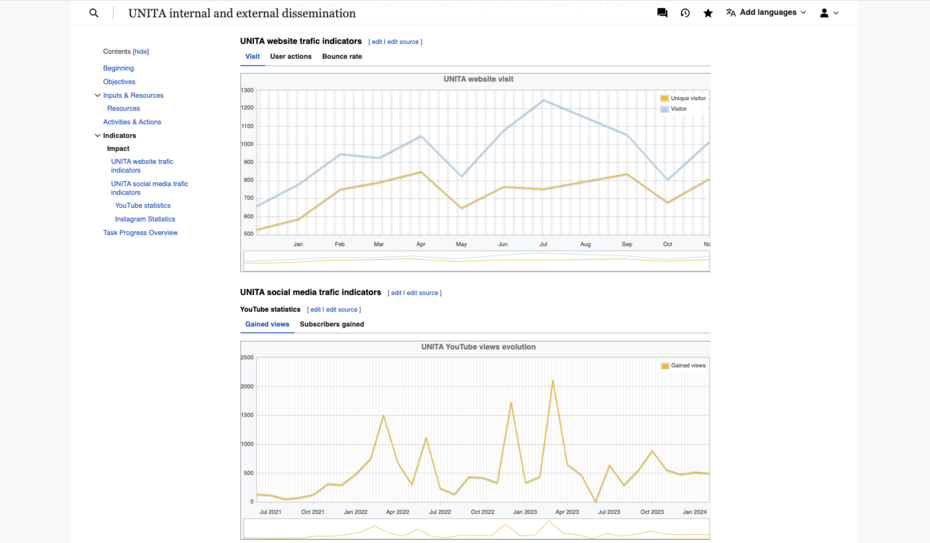

MediaWiki for Dashboards and Reports[edit | edit source]

MediaWiki’s flexibility allows you to build custom dashboards for stakeholders using a combination of extensions (e.g., PageForms, Scribunto, Cargo, Maps, Charts). You can present numeric indicators, graphs, and charts inline on wiki pages.

MediaWiki-Based Dashboards[edit | edit source]

- Charts and Graphs: Extensions like Graph, Maps, or Timeline can visually represent indicator trends (e.g., monthly website traffic, number of participants at events).

- Interactive Tables: The DataTables format (shown above) enables sorting, filtering, and pagination of tabular data.

Key Performance Indicators (KPIs)[edit | edit source]

- The system tracks various KPIs such as

Percentage of deliverables submitted on time

,Number of participants in matching events

, andWebsite traffic volume

. - These metrics can be displayed as gauges, time-series charts, or tables, depending on stakeholder needs.

Data Governance, Quality, and Security[edit | edit source]

Data Quality Assurance[edit | edit source]

Validation Rules[edit | edit source]

- Strapi Forms

- Field-Level Validation: Certain fields (e.g., numerical indicators, date fields) must conform to a predefined format or fall within acceptable ranges. If a value is invalid, the user is prompted to correct it before the data is stored.

- Review & Publish Step: Strapi includes a

Published

button that serves as an extra checkpoint. Users must explicitly publish each entry after reviewing it, ensuring that data is accurate and complete before it proceeds to the transformation process in Apache HOP.

- Apache HOP Transformations

- Additional checks occur when data is moved from Strapi or MinIO into the datamart. These checks may include data type conversions, date validations, and referential integrity (e.g., matching Task IDs to existing records such as T1.2, T1.3).

- Records that fail validation can be flagged or routed to a separate output for manual review and correction.

Error Handling and Logging[edit | edit source]

- Transformation Logs: Apache HOP logs each step of the ETL process. If data is malformed or fails a validation check, the record is either flagged or routed to an

error output

step for manual review. - Notifications: Administrators (or designated data stewards) can receive email or dashboard alerts when ETL jobs fail or encounter anomalies.

- Rollback/Correction: Erroneous data can be corrected in Strapi or CSV files and reprocessed. Historical error logs help identify recurring issues (e.g., formatting mistakes from a specific partner).

Governance Model[edit | edit source]

The governance model defines roles, responsibilities, and processes to ensure data integrity and proper stewardship of indicator definitions.

Roles and Responsibilities[edit | edit source]

- UNITA Office (CRUD on Alliance Level for Their University):

- Can create, read, update, and delete records for their institution’s indicators.

- Responsible for ensuring that data submitted (e.g., participant counts, events) is accurate and timely.

- Task Leader (Read-Only at Task Level):

- Can monitor and view data for all universities participating in their assigned task(s) (e.g., T2.3).

- Typically does not edit or delete data, but may request corrections or clarifications from the UNITA Offices.

- Project Manager (CRUD at All Levels):

- Has the highest level of access, capable of modifying any indicator data across the alliance.

- Primarily uses this access for oversight or emergency corrections, while day-to-day data entry is handled by UNITA Offices.

Version Control of Data Definitions[edit | edit source]

- Indicator Definitions: Each indicator (e.g., T1.2.5, T2.3) may evolve over time. Changes to definitions—such as calculation rules, target values, or frequency—should be documented and versioned.

- MediaWiki Tracking: Edits to indicator documentation pages (in the

Docnamespace) can be tracked via MediaWiki’s revision history, providing a transparent audit trail.

Data Security[edit | edit source]

Access Control[edit | edit source]

- Database Roles and Privileges:

- Row-level or schema-level security can be configured if certain data must be restricted to specific roles.

- Application-Level Permissions:

- Strapi can enforce role-based access, ensuring only UNITA Office roles can submit or update data.

- MediaWiki’s namespaces (e.g.,

DataSrc,Doc) can be locked down to specific groups or user roles for editing, while read access might be more widely available.

Authentication/Authorization[edit | edit source]

- MediaWiki: Users log in to access pages or to perform data-related actions. In a production environment, Single Sign-On (SSO) or OAuth could be integrated to streamline user management.

- Strapi: Form submissions require an authenticated user with the correct permissions.

- Apache HOP Carte: Administrator credentials are required to deploy and schedule transformations.

Datawarehouse Compartmentalization[edit | edit source]

The UNITApedia Datawarehouse is architected to clearly separate different types of data through the use of multiple databases and schemas. Each database within the data warehouse serves a distinct purpose, and within each database, data is organized into logical schemas containing related tables. For example:

- Strapi Database:

- Contains raw input data from Strapi forms.

- Schemas in this database hold tables with initial, untransformed indicator entries.

- Datamart Database:

- Stores transformed and processed data ready for consumption by MediaWiki.

- Within the datamart, tables are organized under one or more schemas (commonly the public schema) such as public.t125, public.t126, etc.

- Additional Databases:

- The unita-data database contains metadata and configuration details used by MediaWiki.

This multi-database, multi-schema design enables:

- Isolation: Raw data is kept separate from processed data, reducing the risk of accidental overwrites and ensuring data integrity.

- Security: Different access controls can be applied at both the database and schema levels, so only authorized roles can access or modify sensitive data.

- Maintainability: Logical separation simplifies performance tuning, backup strategies, and data governance by grouping related tables together.

Implementation Plan[edit | edit source]

Development Environment[edit | edit source]

The system is built on a modular, containerized infrastructure leveraging the following components:

- Operating Environment: Linux-based servers with Docker for containerization and orchestration.

- Programming Environment: MediaWiki and Strapi as primary application layers running on Node.js and PHP, respectively.

- Database: PostgreSQL for structured data and analytics.

- ETL Pipeline: Managed by Apache HOP, which integrates external data sources like MinIO into PostgreSQL.

- Reverse Proxy: Nginx-proxy handles HTTPS, URL routing, and performance optimization.

- Semantic Data Tools: Semantic MediaWiki and ontology-driven extensions to enable inferencing and semantic data handling.

- Development Tools: Node.js, Yarn, and Alpine Linux for lightweight builds and dependency management.

Steps to Implement[edit | edit source]

- Docker Setup:

- Configure

docker-compose.ymlto define containers for MediaWiki, Strapi, PostgreSQL, Apache HOP, MinIO, and Nginx-proxy. - Use environment variables to manage sensitive data such as API keys and database credentials securely.

- Deploy the containers and verify that all services are correctly orchestrated and networked.

- MediaWiki Deployment:

- Install MediaWiki and configure

LocalSettings.phpwith:- Connection details for PostgreSQL (

host,database name,user credentials). - Enabled extensions such as Scribunto, Semantic MediaWiki, and External Data.

- Connection details for PostgreSQL (

- Implement namespaces for better content organization:

DOCandDOC_TALKfor project documentation.DATASRCandDATASRC_TALKfor data source references.

- Configure file uploads, multi-language support, and permissions for user roles.

- Strapi Setup:

- Build and deploy Strapi using the provided

Dockerfile. - Configure Strapi with:

- Database: PostgreSQL, with connection settings in

database.ts. - API Tokens: Secure endpoints with

API_TOKEN_SALT. - Roles and Permissions: Define roles and permissions for form data inputs and API access.

- Database: PostgreSQL, with connection settings in

- Validate form creation and ensure APIs are accessible for both MediaWiki and PostgreSQL.

- ETL Configuration:

- Configure Apache HOP to set up ETL processes:

- Extract raw data from sources like MinIO or external file storage (Nextcloud).

- Transform the data into a standardized format suitable for PostgreSQL.

- Load the transformed data into the data warehouse.

- Schedule regular ETL jobs and test data integrity after each run.

- Ontology Integration:

- Implement ontology-based semantic relations in MediaWiki using Semantic MediaWiki.

- Ensure alignment of relational schema with ontology entities (e.g., WorkPackages, Tasks, Deliverables).

- Nginx Reverse Proxy Configuration:

- Configure Nginx-proxy to handle HTTPS, route traffic to containerized services:

- Enable SSL encryption with ACME Companion for automatic certificate management.

- Configure caching to improve response times for static resources.

- Integration Testing:

- Test inter-service communication (e.g., MediaWiki querying PostgreSQL, Strapi APIs).

- Perform load and stress testing to ensure performance under concurrent users and large datasets.

- Deployment:

- Deploy containers in a production environment with robust resource allocation.

- Secure connections using SSL certificates and Nginx-proxy configurations.

MediaWiki Configuration[edit | edit source]

- Site Name: UNITApedia.

- Database:

- Type: PostgreSQL.

- Host:

datawarehouse(Docker service). - Name, user, password: Retrieved securely via environment variables.

- Uploads and ImageMagick: Enabled with a memory limit of 512 MB.

- Languages: Default set to English with UTF-8 support.

- Permissions: Restricts editing and account creation to authorized roles.

- Extensions: Loaded with configurations for semantic data handling and external data sources.

- External Data Sources: Configured to connect to the data warehouse for PostgreSQL queries.

- Namespaces:

DOC,DOC_TALK,DATASRC,DATASRC_TALK.

Docker Deployment[edit | edit source]

The system uses a docker-compose.yml file with the following services:

- PostgreSQL: Stores structured data and analytics queries to both MediaWiki and Strapi.

- MediaWiki: Hosts the wiki application.

- Volumes:

LocalSettings.php (read-only) extensions (all installed extensions) images (upload directory)

- Strapi: Deployed as a Node.js application using the provided

Dockerfile.- Configurations:

* Uses PostgreSQL for data storage with secure SSL connections. * Exposes APIs for MediaWiki and external consumers.

- Nginx-proxy: Handles HTTPS connections and reverse proxying.

- Apache HOP: Processes raw data and integrates it into the warehouse.

- MinIO: Stores raw data from UNITA Offices.

Maintenance[edit | edit source]

- Backup Strategies:

* Schedule cron jobs for daily backups of PostgreSQL and MediaWiki configurations.

Security[edit | edit source]

- Secure API endpoints with JWT tokens and SALT configurations.

- Enforce HTTPS for all communications via Nginx-proxy.

- Use environment variables for sensitive data like database credentials.